
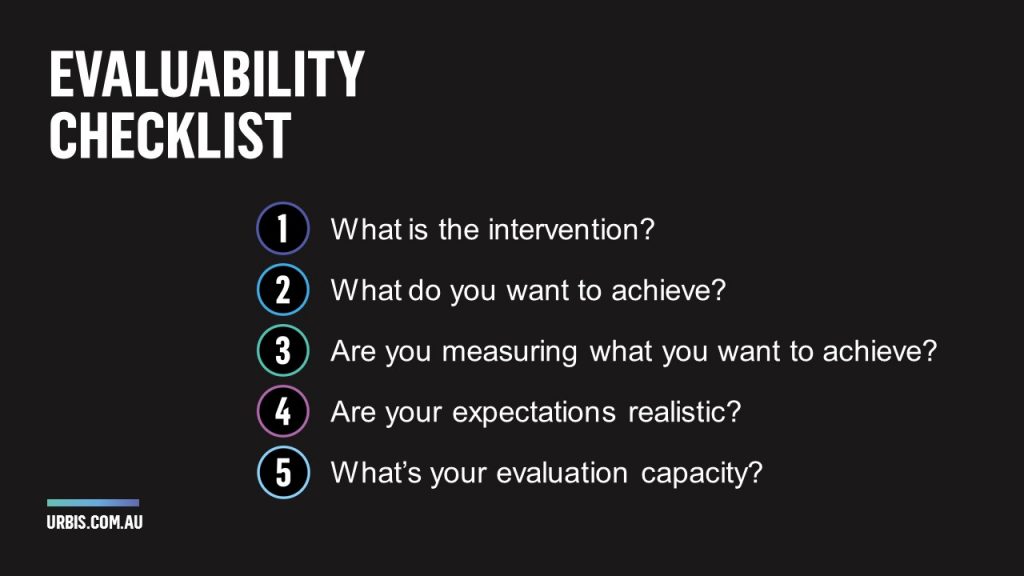

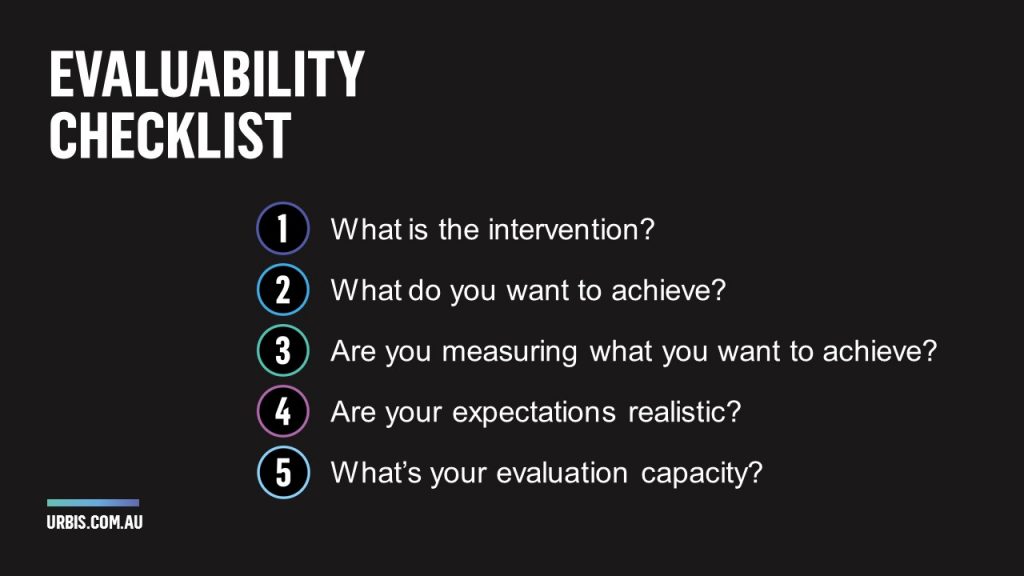

At the heart of this is a disconnect between intervention designers and evaluators. We believe that much of this could be overcome by incorporating some of the theory and knowledge from evaluation practice into intervention design.

This doesn’t need to be a complicated process. We’ve narrowed it down to five key questions that intervention designers can ask themselves when setting up their program. Asking them will improve not only the feasibility and quality of an evaluation, but also the quality of the intervention itself.

- What is the intervention? It sounds simple! But make sure you have a clear scope that all the stakeholders understand and see their role in, and document it so everyone understands the logic of your intervention.

- What do you want to achieve? Think about your priorities. Your intervention won’t manage everything, no matter how hard you try, so what would success look like to you and your stakeholders?

- Are you measuring what you want to achieve? When you’re setting up your intervention and reporting systems, include a measure for the outcome you want to have an impact on. You will only understand the effects your intervention has had if you can see them.

- Are your expectations realistic? Make sure you’re measuring what your intervention can have an impact on. What are your timeframes for change? At what scale will change occur? Get comfortable with being more conservative about attributing change to your intervention.

- What’s your evaluation capacity? Identify who within your organisation understands evaluation. Intervention design works best when those with internal capacity work hand in hand with evaluation experts to shape outcomes.

Building evaluability into intervention design means asking questions up-front and doing the hard work in the set-up. Real magic probably doesn’t exist, and we’re probably never going to be able to work magic by comprehensively evaluating a program where none of the set-up has been done. But put the props in the right place and, like any good illusionist, we’ll make the magic happen.

To learn more about the work done by Urbis’ policy and economics experts, and how they can work with you in creating truly evaluable programs to support accountability and ongoing improvements, get in touch with one of the team: